Living Instruments

Living Instruments is a collaborative project between Swiss musician Serge Vuille (under the umbrella of his project WeSpoke) and the DIY science crowd of the community laboratory Hackuarium.

It is a musical composition for a series of instruments that use bacteria, yeast and other living organisms to generate music. By interacting with the organisms and recording the sounds, gas bubbles, pressure, and movements they generate, the artists can transform data into music, creating a live, semi-improvised musical piece.

What

This collaboration has three goals:

- To build a set of living instruments that use biological information or organisms as sources of signal.

- To compose a musical piece using these instruments, turning their output signals into sound.

- To place this collaborative project in the context of the current state of DIY, science and music.

Who is involved?

- Serge Vuille, musician

- Vanesa Lorenzo, product & media designer

- Luc Henry, biologist and science communicator

- Robert Torche, sound designer

- Oliver Keller, electronics and IT specialist

- WeSpoke musicians

When

The final deliverable was a concert/performance that took place on February 10th 2016, at the music and performance art venue Le Bourg, in Lausanne, Switzerland. More information about the event can be found here.

The concert was followed by a workshop/masterclass at the Lift conference on innovation and digital technologies in Geneva, Switzerland, on February 12th 2016. More information about the workshop can be found here.

In August 2016, the performance was part of WeSpoke's "carte blanche" at the International Summer Course for New Music in Darmstadt, Germany. It was presented as a workshop on "Biochemistry and white noise" on Saturday August 13th at the Lichtenbergschule and performed in full on Sunday August 14th at the Centralstation venue.

The piece was performed at two locations in 2016.

- 10 February: Concert, Le Bourg, Lausanne, Switzerland

- 14 August: Concert, Centralstation, Darmstadt, Germany

The piece was performed at three locations in 2017.

- 3 April 2017: OTO Project - Instruments exhibition and workshop

- 4 April 2017: Concert - Kammer Klang series, Cafe OTO, London, UK

- 20-21 May 2017: Exhibition and performance during the Nuit des Musées - Centre Dürrenmatt, Neuchâtel, Switzerland

- 8 September 2017: Concert - Klang Moor Schopfe Festival, Gaïs, Switzerland

The piece has already been performed at one location in 2018.

- 31 January 2018: Concert - Le Bourg, Lausanne

What's Next

So far, the following tour dates have been confirmed:

- 21 October 2018: Concert and workshop - Zürich

More to come! And if you want to help us bring Living Instruments to new audiences, get in touch! In any case, we will keep you updated on the next residencies and events dedicated to bringing living instruments to life!

Description of the installation

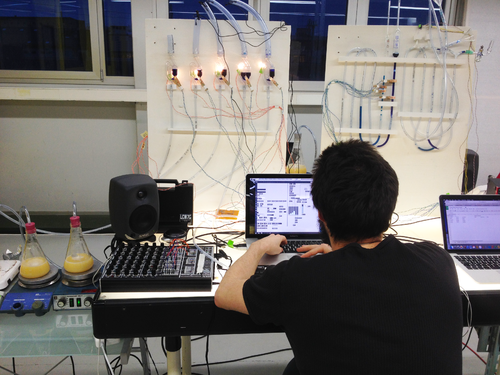

The following instruments were used for the world premiere at the music venue Le Bourg in Lausanne on February 10th 2016, and later at the International Summer Course for New Music on August 14th 2016.

Bubble Organ

The concept

The Bubble Organ is an instrument powered by fermenting yeast cultures.

This video of the first minutes of the performance will give you an idea of the sounds produced by this instrument.

The setup

The following items are necessary for the construction of the Bubble Organ:

- 5x Erlenmeyer flasks (1x 10L, 1x 4L, 1x 3L, 1x 2L, 1x 1L, 1x 0.5L)

- 5x S-shaped "Double Bubble" airlocks for beer and wine fermentation

- 5x 1500mm plastic tubing (6x1.5mm)

- 10x LED

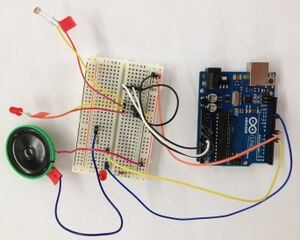

- 1x Arduino MEGA equipped with a USB host shield.

- 1x 12V power supply

- 1x USB cable 2m (Arduino to computer)

- 1x ION Discover USB keyboard

- 1x USB cable 1m (keyboard to Arduino)

The circuit

The controller for this instrument was based on an Arduino Mega equipped with an Arduino USB host shield.

The complete code can be found here.
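The controller code itself is linked rather than reproduced here. As a hedged illustration of the core idea only, the sketch below turns a stream of photodiode readings from one flask into discrete bubble events using two thresholds (hysteresis), so that a noisy signal hovering near a single threshold does not fire repeatedly. The class name `BubbleDetector`, the threshold values and the assumption that a bubble raises the reading are hypothetical; on an Arduino the samples would come from `analogRead()`.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical bubble detector for one flask. A passing bubble is assumed to
// let more LED light reach the photodiode, raising the reading. Two
// thresholds (hysteresis) stop a noisy signal hovering near a single
// threshold from triggering repeatedly.
class BubbleDetector {
public:
    BubbleDetector(int onThreshold, int offThreshold)
        : on_(onThreshold), off_(offThreshold) {
        assert(offThreshold < onThreshold);  // hysteresis needs a gap
    }

    // Feed one sample (e.g. a 10-bit analogRead() value, 0..1023).
    // Returns true exactly once per bubble, when it first crosses onThreshold.
    bool update(int sample) {
        if (!inBubble_ && sample >= on_) {
            inBubble_ = true;
            ++count_;
            return true;  // new bubble: the caller could trigger a note here
        }
        if (inBubble_ && sample <= off_) {
            inBubble_ = false;  // bubble has passed; re-arm the detector
        }
        return false;
    }

    std::uint32_t count() const { return count_; }

private:
    int on_;
    int off_;
    bool inBubble_ = false;
    std::uint32_t count_ = 0;
};

// Count the bubbles in a recorded trace of samples.
std::uint32_t countBubbles(const std::vector<int>& samples, int on, int off) {
    BubbleDetector detector(on, off);
    for (int s : samples) detector.update(s);
    return detector.count();
}
```

With one detector per flask, each flask could be assigned its own note. For instance, a noisy reading going 450, 520, 480, 510 would count as two bubbles with a single threshold at 500, but as one with an on/off pair of 500/300.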

The software

Tube Log

Moss Carpet

The concept

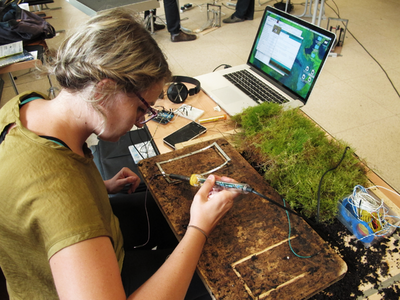

The moss carpet was designed and realised by Vanesa Lorenzo.

A moss-human interface conceived in 2013 and developed further through an interdisciplinary research project on natural bioreporters: indicator species such as mosses and lichens, through which we can sense the environment and climate change. Mosses are tiny organisms, among the first plants to emerge from the ocean and conquer the land; they are unique and delicate native species with slow growth cycles that may help decipher the secrets of life on Earth. As pioneer species, they can live in very harsh conditions and also provide important microhabitats for an extraordinary variety of organisms and plants. Interacting with the mossphone produces playful sound feedback that changes depending on the person touching it. When the moss is touched, the person becomes, in a way, part of the same element, changing its capacitance (the moss acts as a capacitive sensor); these changes are captured as data and converted into sound. The result is the illusion that the moss is alive and reacts to the way we touch it, as if it were singing, snarling, murmuring or growling.

This video will give you an idea of the sounds produced by this instrument.

Electronic circuit with an active capacitive sensor or antenna: the organic interactive object invites touch and reacts to physical contact by emitting real-time sinusoidal sound feedback, through serial communication with Pure Data or Max/MSP.
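The sound itself was produced in Pure Data or Max/MSP. Purely as an illustration, written in C++ rather than as a patch, the sketch below shows one plausible mapping: a raw capacitive reading is clamped and scaled linearly onto a frequency range, and a block of sine samples is rendered at that frequency. The reading range (0-2000), the 110-880 Hz range and the function names are assumptions, not values from the project.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Map a raw capacitive reading linearly onto a frequency range (Hz).
// Readings outside [minReading, maxReading] are clamped first.
double readingToFrequency(long reading, long minReading, long maxReading,
                          double minHz, double maxHz) {
    assert(maxReading > minReading);
    long clamped = std::clamp(reading, minReading, maxReading);
    double t = static_cast<double>(clamped - minReading) /
               static_cast<double>(maxReading - minReading);
    return minHz + t * (maxHz - minHz);
}

// Render n samples of a sine wave at the given frequency and amplitude.
std::vector<float> sineBlock(double freqHz, double amplitude,
                             double sampleRate, std::size_t n) {
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<float> out(n);
    for (std::size_t i = 0; i < n; ++i) {
        double phase = twoPi * freqHz * static_cast<double>(i) / sampleRate;
        out[i] = static_cast<float>(amplitude * std::sin(phase));
    }
    return out;
}
```

A firmer touch (a larger reading) then gives a higher tone; a real patch would also smooth the readings and keep the oscillator phase continuous between blocks.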

First prototype (first iteration): magic moss.

[1] Moss growing old, but wise, with other living instruments.

The setup

Moss 1 receive antenna: conductive thread or metal wires and two 2.2 MΩ resistors.

The circuit

The controller for this instrument was based on an Arduino Uno. Full instructions here.

The software

This software uses the CapSense library:

/* * CapSense Library * Paul Badger 2008 * IMI version for 12 sensors and send to Max/MSP (use regexp to decode) */

Full software here
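The library header above mentions sending 12 sensor values to Max/MSP and decoding them with a regular expression (in Max, the `regexp` object). The actual message format is not documented on this page, so the sketch below assumes a hypothetical one-line-per-reading format, `c<index> <value>` (for example `c3 1542`), and shows both the encoding side and a regex-based decoder with bounds checking.

```cpp
#include <cassert>
#include <optional>
#include <regex>
#include <string>

constexpr int kNumSensors = 12;

struct Reading {
    int sensor;  // 0..11
    long value;  // raw capacitive value
};

// Build one line in the hypothetical "c<index> <value>" format.
std::string encodeReading(int sensor, long value) {
    assert(sensor >= 0 && sensor < kNumSensors);
    return "c" + std::to_string(sensor) + " " + std::to_string(value);
}

// Decode one line; return nothing on malformed input or an out-of-range
// sensor index. Trailing whitespace (e.g. "\r" from a serial line) is allowed.
std::optional<Reading> decodeReading(const std::string& line) {
    static const std::regex pattern(R"(^c(\d{1,2}) (-?\d+)\s*$)");
    std::smatch m;
    if (!std::regex_match(line, m, pattern)) return std::nullopt;
    int sensor = std::stoi(m[1].str());
    if (sensor >= kNumSensors) return std::nullopt;
    return Reading{sensor, std::stol(m[2].str())};
}
```

Keeping the sensor index in every line lets the receiver route each value to its own sound voice even if a line is lost.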

Virtual Soprano

It detects your facial expressions and turns them into vocal sounds, like a virtual soprano. All documents here.

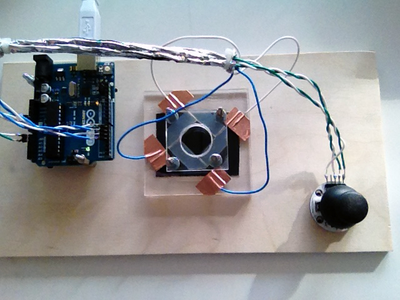

Paramecia Controller

The Paramecia Controller is a setup inspired by

Paramecia Bolero

Log

The details of the preparation are recorded live here

A budget and shopping list for this project will be shared later on.

Final days 01-08.02.2016

When?

Every day 1st-8th February 10am - 8pm (or until midnight, depending on the day)

Who was there?

- Serge Vuille

- Vanesa Lorenzo

- Luc Henry

- Alain Vuille

- Oliver Keller

- Robert Torche

- WeSpoke musicians (7-8 February)

What?

Paramecia controller

After several prototypes, the SeePack microscope camera was replaced by a Logitech C270 webcam. The device was built according to a previously described design (instruction video here), except that the bottom acrylic glass plate was kept intact (with a piece of dark paper underneath). A rubber seal was also added between the two acrylic glass plates (see picture below). The joystick was a Play-Zone Arduino PS2.
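The tracking software is not described on this page. One simple approach, shown here only as an assumption, is to threshold each grayscale webcam frame and take the centroid of the bright pixels, normalised to 0..1 so that it can drive sound parameters much like a joystick axis. The function name, the threshold and the bright-on-dark assumption (plausible given the dark paper under the plate, but not confirmed) are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

struct Point { double x; double y; };  // normalised to 0..1

// Centroid of all pixels brighter than `threshold` in a row-major 8-bit
// grayscale frame. Assumes organisms appear bright on a dark background.
std::optional<Point> brightCentroid(const std::vector<std::uint8_t>& frame,
                                    int width, int height,
                                    std::uint8_t threshold) {
    assert(width > 1 && height > 1);
    assert(frame.size() == static_cast<std::size_t>(width) * height);
    double sumX = 0.0, sumY = 0.0;
    long count = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (frame[static_cast<std::size_t>(y) * width + x] > threshold) {
                sumX += x;
                sumY += y;
                ++count;
            }
        }
    }
    if (count == 0) return std::nullopt;  // nothing detected in this frame
    // Normalise pixel coordinates to 0..1 so they can drive controls directly.
    return Point{sumX / count / (width - 1), sumY / count / (height - 1)};
}
```

With several paramecia in the field, this yields their average position; following individual organisms would need blob labelling, which this sketch leaves out.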

The first prototype, used in the first performances, looked like this:

A second version was made for the performance in Gaïs in September 2017.

Urs from GaudiLabs has also described a similar module (instruction video here).

N/O/D/E Festival

What?

Several prototypes of the Living Instruments project were featured at the N/O/D/E digital culture festival.

The 2016 edition focused on Algorithms and Big Data and hosted an annual theremin meet-up.

The festival is organised by the association Longueur d’Ondes (coordination and programming: Coralie Ehinger & Julie Henoch).

Where?

The Meet & Geek Laboratory

2nd floor at "Pole Sud" Cultural Center in Lausanne.

When?

30.01.2016 from 9am to 6pm

Who was there?

- Vanesa Lorenzo

- Luc Henry

- Alain Vuille

Construction weeks 25-29.01.2016

When?

Every day 25th-29th January 10am - 6pm (or until midnight, depending on the day)

Connecting FaceOSC to MaxMSP to try out sound patterns.

Who was there?

What?

Blubblob Pond

A blob of seawater organisms from a local pet store in a crystal ball, with a camera, a laser-cut structure in transparent polyvinyl, and metal screws and nuts.

Connected to MaxMSP.

Facetracker Tree

Try-outs with sound patterns.

Construction weeks 18-25.01.2016

When?

Every day 11-16th and 18th-25th January 9am - 5pm (or until midnight, depending on the day)

Who was there?

- Serge Vuille

- Vanesa Lorenzo

- Luc Henry

- Alain Vuille

- Gilda Vonlanthen

- Olivier Keller

Building the modules

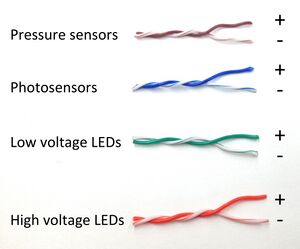

Wiring:

The whole system was wired using twisted-pair cables from Ethernet cables.

To avoid confusion, the 4 types of components were wired using a colour code (see picture below).

Fermentation cultures:

We used pure brewing yeast cultures growing in YPD medium and feeding on glucose to produce CO2 gas:

- We started from 2 mL of dense culture (grown overnight from a plate) in 250 mL of fresh medium.

- The next day, the dense cultures were supplemented with 50 mL of 40% glucose solution (not sterile), giving an 8% final glucose concentration.

- The cultures started producing CO2 after 30 min (2 mL CO2 per minute)

- The cultures could be used after 90 minutes (5 mL CO2 per minute)
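The glucose figures above can be cross-checked with a simple mass balance. This sketch assumes the YPD medium started at the standard 2% glucose (5 g in 250 mL) and brackets two cases, initial glucose still present or fully consumed overnight; the 2 mL inoculum is ignored. It also converts a CO2 flow into an expected bubble rate; the 0.1 mL bubble volume is a hypothetical figure, not a measurement from this project.

```cpp
#include <cassert>

// Final glucose concentration (% w/v, i.e. g per 100 mL) after adding a
// glucose stock to a culture. Volumes in mL, concentrations in % w/v.
double finalGlucosePercent(double cultureMl, double culturePercent,
                           double stockMl, double stockPercent) {
    assert(cultureMl + stockMl > 0.0);
    double grams = cultureMl * culturePercent / 100.0 +
                   stockMl * stockPercent / 100.0;
    return 100.0 * grams / (cultureMl + stockMl);
}

// Expected bubbles per minute through an airlock or bubble sensor, given a
// CO2 flow (mL/min) and an assumed volume per bubble (mL).
double bubblesPerMinute(double co2MlPerMin, double bubbleMl) {
    assert(bubbleMl > 0.0);
    return co2MlPerMin / bubbleMl;
}
```

With these inputs, `finalGlucosePercent(250, 2, 50, 40)` gives about 8.3% and `finalGlucosePercent(250, 0, 50, 40)` about 6.7%, so the 8% figure is consistent with counting most of the medium's own glucose. At 2 mL and 5 mL of CO2 per minute, a 0.1 mL bubble would mean roughly 20 and 50 bubbles per minute.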

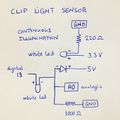

Bubble sensors:

Bubble sensors were built using a white LED and a photodiode, mounted in a wooden clothespin.

An option for calibrating the sensors was explored, based on a tutorial from Arduino.
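Arduino's built-in Calibration example records the highest and lowest sensor readings seen during the first five seconds after start-up, then rescales later readings with `map()` and clips them with `constrain()`. Assuming that is the tutorial referred to, the same logic for a bubble sensor could look like this in plain C++; the class name and the 0-255 output range are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <climits>

// Same integer formula as Arduino's map(): linear rescale, truncating.
long arduinoMap(long x, long inMin, long inMax, long outMin, long outMax) {
    assert(inMax != inMin);
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Min/max calibration in the spirit of the Arduino Calibration example:
// observe() is called during a calibration window (for example while
// bubbles pass the sensor), then scaled() maps readings onto 0..255.
class SensorCalibration {
public:
    void observe(long reading) {
        minSeen_ = std::min(minSeen_, reading);
        maxSeen_ = std::max(maxSeen_, reading);
    }

    bool ready() const { return maxSeen_ > minSeen_; }

    long scaled(long reading) const {
        assert(ready());
        long v = arduinoMap(reading, minSeen_, maxSeen_, 0, 255);
        return std::clamp(v, 0L, 255L);  // like constrain(v, 0, 255)
    }

private:
    long minSeen_ = LONG_MAX;
    long maxSeen_ = LONG_MIN;
};
```

Calibrating each sensor separately compensates for differences between LEDs, photodiodes and ambient light in the venue.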

Microphones:

Microphone/headphone amplifier stereo DIY kits (MK136, Velleman) were purchased from Distrelec and built according to the instructions.

Paramecia tracking device:

A SeePack microscope camera (see picture below) was mounted on our Carl Zeiss Axiolab E re microscope.

The EasyCapViewer 0.6.2 software was used as a player to record live images.

Mossphone:

Hunting the moss, building the platform and wiring it to Arduino.

Programmed in the Arduino IDE.

HOW TO PROGRAM/WIRE TO ARDUINO AND COMMUNICATE WITH MAXMSP soon on Github.

FacetrackerTree:

A device that tracks human facial expressions, with code that translates them into sound patterns.

Code in openFrameworks here: FaceOSC [2]

HOW TO COMMUNICATE WITH MAXMSP soon on Github.
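FaceOSC sends facial gesture values as OSC messages to addresses such as `/gesture/mouth/height` and `/gesture/eyebrow/left` (check the list against the FaceOSC version in use); in this project they were received by MaxMSP. The sketch below leaves out OSC networking entirely and shows one hypothetical way to turn such values into a "virtual soprano" voice: mouth opening drives loudness and eyebrow height drives pitch. The input ranges, the two-octave span and the names `Voice` and `applyGesture` are placeholders, not the project's patch.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <string>

// Parameters of a hypothetical "virtual soprano" voice.
struct Voice {
    int midiNote = 69;       // A4
    double amplitude = 0.0;  // 0..1
};

// Linear rescale of v from [lo, hi] to [0, 1], clamped.
double normalise(double v, double lo, double hi) {
    assert(hi > lo);
    return std::clamp((v - lo) / (hi - lo), 0.0, 1.0);
}

// Update the voice from one FaceOSC gesture value. The input ranges are
// placeholders; real ranges should be measured for each performer.
void applyGesture(Voice& voice, const std::string& address, double value) {
    if (address == "/gesture/mouth/height") {
        // Wider-open mouth -> louder voice.
        voice.amplitude = normalise(value, 1.0, 6.0);
    } else if (address == "/gesture/eyebrow/left") {
        // Raised eyebrow -> higher note, over two octaves above C4 (MIDI 60).
        double t = normalise(value, 7.0, 9.0);
        voice.midiNote = 60 + static_cast<int>(std::lround(24.0 * t));
    }
    // Other addresses (e.g. /gesture/eye/left) are ignored in this sketch.
}

// Equal-temperament frequency of a MIDI note (A4 = 69 = 440 Hz).
double midiToHz(int note) {
    return 440.0 * std::pow(2.0, (note - 69) / 12.0);
}
```

Rounding the pitch to whole MIDI notes keeps the voice on a chromatic scale; dropping the rounding would give a continuous glide instead.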

Prototyping Week-end 19-21.09.2015

When?

Saturday 19th September 11am - 7pm

Sunday 20th September 10am - 8pm

Monday 21st September 10am - 6pm

Who was there?

- Serge Vuille

- Vanesa Lorenzo

- Luc Henry

- Michael Pereira

Brainstorming Session

We spent those three days brainstorming at UniverCité, trying to design 'instruments' that we could play, based on fermenting yeast cultures.

The 'instruments' we envisage would be driven by living organisms whose activity we can turn into sound. The most obvious example is fermentation (microorganisms consuming glucose and releasing alcohol and CO2), which produces a gas that can be used to make bubbles, sounds, etc.
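For reference, the overall equation of alcoholic fermentation (standard textbook stoichiometry) and a rough upper bound on the gas available from the 20 g of glucose added to each culture in the protocol above (50 mL of 40% stock) are:

```latex
\mathrm{C_6H_{12}O_6} \;\longrightarrow\; 2\,\mathrm{C_2H_5OH} + 2\,\mathrm{CO_2}

n_{\mathrm{CO_2}} \le 2 \times \frac{20\ \mathrm{g}}{180.16\ \mathrm{g\,mol^{-1}}} \approx 0.222\ \mathrm{mol},
\qquad
V_{\mathrm{CO_2}} \lesssim 0.222\ \mathrm{mol} \times 24.0\ \mathrm{L\,mol^{-1}} \approx 5.3\ \mathrm{L}
```

The molar volume of about 24 L/mol assumes an ideal gas near 20 °C and atmospheric pressure. In practice part of the glucose goes into yeast growth and some CO2 stays dissolved, so the real yield is lower.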

These instruments fall into three categories:

LIVE - AUTONOMOUS AND COMPUTER INTERACTION: no human is needed to produce sound from the organisms' activity.

- Gas-producing fermentation broth (bacteria and yeast) and gas-machine interactions

- Movement-recognition software for insects (moths, fruit flies)

LIVE - HUMAN INTERACTION: a human player reads a score written by living organisms and plays an 'instrument' according to this score.

- Keyboard and microscopic score: microorganisms in water from a local pond are observed under the microscope as they move across a "score" slide

RECORDED - COMPUTER INTERACTION: a recorded signal from an organism is used as a score and played either directly by a computer or by a human player.

- Using, for example, genomic data (from the organisms used in the other 'instruments') to generate sound. This could be simple (turning the bases A, T, G and C into tunes) or complex (using codons, the base triplets that encode amino acids).
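The two genomic options can be sketched as follows. Both mappings are illustrative choices, not the project's: the simple version assigns one fixed note to each base, and the complex version reads the sequence in codons (base triplets), giving 64 possible values that are folded onto three octaves of a C-major scale. A real amino-acid mapping would need the 64-entry genetic code table, which this sketch does not include.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Index of a DNA base (A=0, C=1, G=2, T=3), or nothing for other characters.
std::optional<int> baseIndex(char b) {
    switch (b) {
        case 'A': case 'a': return 0;
        case 'C': case 'c': return 1;
        case 'G': case 'g': return 2;
        case 'T': case 't': return 3;
        default: return std::nullopt;
    }
}

// Simple mapping: one MIDI note per base (C4, E4, G4, B4 chosen arbitrarily).
std::vector<int> basesToNotes(const std::string& dna) {
    static const int notes[4] = {60, 64, 67, 71};
    std::vector<int> out;
    for (char b : dna) {
        if (auto i = baseIndex(b)) out.push_back(notes[*i]);
    }
    return out;
}

// Complex mapping: read codons (triplets), giving values 0..63, and fold them
// onto 21 notes (three octaves of C major starting at C3 = MIDI 48).
// Non-base characters are skipped; incomplete trailing bases are dropped.
std::vector<int> codonsToNotes(const std::string& dna) {
    static const int scale[7] = {0, 2, 4, 5, 7, 9, 11};
    std::vector<int> idx;
    for (char b : dna) {
        if (auto i = baseIndex(b)) idx.push_back(*i);
    }
    std::vector<int> out;
    for (std::size_t k = 0; k + 2 < idx.size(); k += 3) {
        int codon = idx[k] * 16 + idx[k + 1] * 4 + idx[k + 2];
        assert(codon >= 0 && codon < 64);
        int degree = codon % 21;
        out.push_back(48 + 12 * (degree / 7) + scale[degree % 7]);
    }
    return out;
}
```

For example, the start codon ATG becomes a single note (C5, MIDI 72) in the codon mapping, but three notes (C4, B4, G4) in the base-by-base mapping.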

Building the first prototype

Together with Serge Vuille, our musician in residence, we designed and built a series of living instrument prototypes.

(Thanks biodesign.cc for the soldering iron!)

Overview of the prototype:

Before we go into too much detail, check out the video!

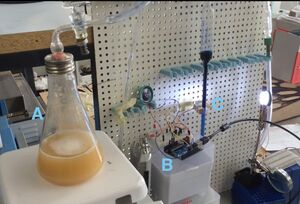

Now for a few more details. Below you will find a picture of the setup, the recipe used to produce CO2 by yeast fermentation, and a diagram of the bubble detector device.

Tubing set-up:

A. is the 250mL yeast culture producing the CO2.

B. is the electronic circuit that allows our Arduino UNO board to make a variable sound when gas bubbles are going through the glass tube filled with ink-coloured water.

C. is simply a photodiode that will sense white light (from white LEDs) when the bubbles pass by.

Fermentation cultures:

We used two different types of cultures to produce CO2 gas:

As a test, we used a spontaneous culture from rotting fruit juice.

- Blackberries were squashed into a juice, diluted to one fifth in water (40 mL of juice in 160 mL of water) and the mixture was stirred at room temperature. CO2 gas was produced immediately.

To get something more reproducible, we used pure brewing yeast cultures feeding on glucose.

- The overnight medium was composed as follows: 2.5 g yeast extract (YE), 5 g peptone, 5 g glucose.